MLCA Week 7: Hyperparameter Followup

Mike Mahoney

2021-10-13

What are we doing here?

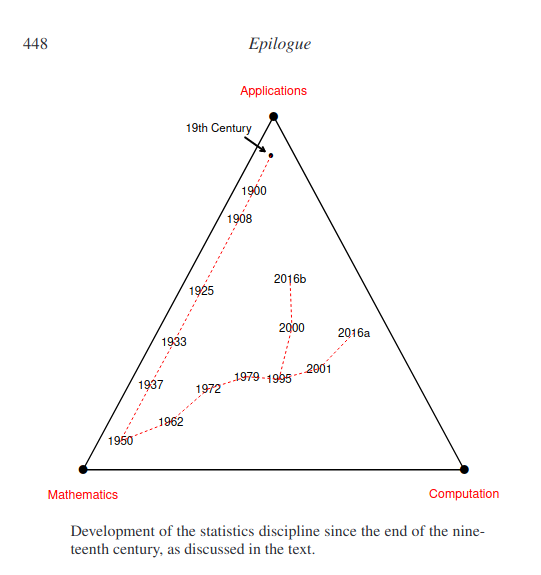

Some of the topics discussed fit within traditional statistics but others seem to have escaped, heading south, perhaps in the direction of computer science.

The escapees were the large-scale prediction algorithms: neural nets, deep learning, boosting, random forests, and support-vector machines. Notably missing from their development were parametric probability models, the building blocks of classical inference. Prediction algorithms are the media stars of the big-data era.

Statistics is a branch of applied mathematics, and is ultimately judged by how well it serves the world of applications. Mathematical logic, a la Fisher, has been the traditional vehicle for the development and understanding of statistical methods. Computation, slow and difficult before the 1950s, was only a bottleneck, but now has emerged as a competitor to (or perhaps an enabler of) mathematical analysis.

A cohesive inferential theory was forged in the first half of the twentieth century, but unity came at the price of an inwardly focused discipline, of reduced practical utility. In the century’s second half, electronic computation unleashed a vast expansion of useful—and much used—statistical methodology.

Expansion accelerated at the turn of the millennium, further increasing the reach of statistical thinking, but now at the price of intellectual cohesion.

This course attempts to guide students through several of the most common machine learning approaches at a conceptual level with a focus on applications in R.

Part 1: Prediction

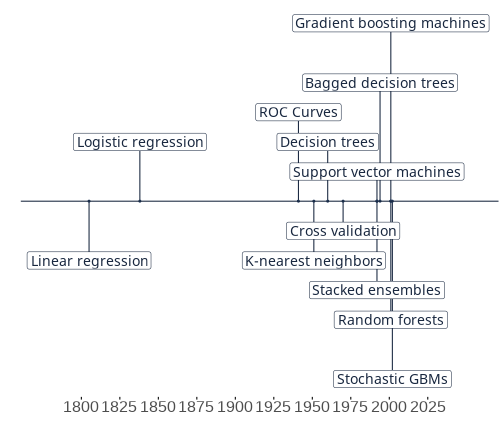

| Week | Topic |
|---|---|
| 1 | Prediction, Estimation, and Attribution |
| 2 | Regression |
| 3 | Classification |
| 4 | Classification with imbalanced classes |

Part 2: Machine Learning

| Week | Topic |
|---|---|
| 5 | Decision Trees |
| 6 | Random Forests |
| 7 | Hyperparameters and Model Tuning |
| 8 | Gradient Boosting Machines |
| 9 | Stochastic GBMs and Stacked Ensembles |
| 10 | k-Nearest Neighbors |
| 11 | Support Vector Machines (as time allows) |

Part 3: Doing The Thing

| Week | Topic |
|---|---|
| 12-13 | Project Work |
| 14 | Presentations |

A great amount of ingenuity and experimentation has gone into the development of modern prediction algorithms, with statisticians playing an important but not dominant role. There is no shortage of impressive success stories. In the absence of optimality criteria, either frequentist or Bayesian, the prediction community grades algorithmic excellence on performance within a catalog of often-visited examples.

“Optimal” is the key word here. Before Fisher, statisticians didn’t really understand estimation. The same can be said now about prediction. Despite their impressive performance on a raft of test problems, it might still be possible to do much better than neural nets, deep learning, random forests, and boosting — or perhaps they are coming close to some as-yet unknown theoretical minimum.