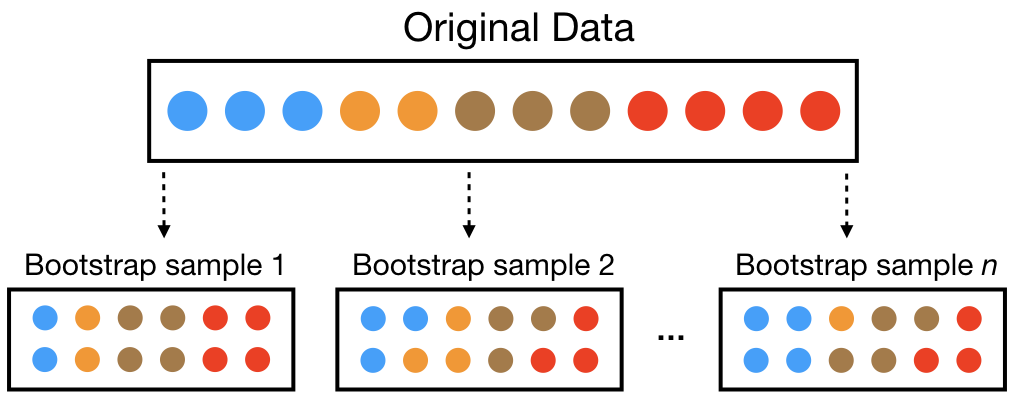

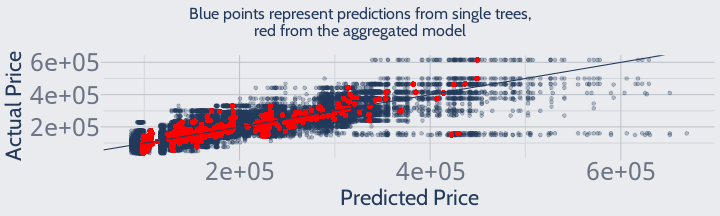

class: center, middle, inverse, title-slide # MLCA Week 6: ## Random Forests ### Mike Mahoney ### 2021-10-06 --- class: center, middle # Random Forests --- .bg-washed-green.b--dark-green.ba.bw2.br3.shadow-5.ph4.mt5[ A lot of the stuff going on [in AI] is not very ambitious. In machine learning, one of the big steps that happened in the mid-’80s was to say, “Look, here’s some real data – can I get my program to predict accurately on parts of the data that I haven’t yet provided to it?” What you see now in machine learning is that people see that as the only task. .tr[ — Stuart Russell ] ] --- class: middle Last week, we talked about how decision trees tend to overfit, producing high variance models which might change a lot if they were fit to a different training set. If we could find a way to reduce that variance, we might be able to make our model altogether more accurate. If our prediction surface had softer edges, we might be able to capture more of the actual structure of our data. And so statisticians had an idea: what if we fit decision trees to _many_ training sets, then aggregate the predictions? Each individual decision tree will still be high-variance, but maybe the average of their predictions could smooth out some of the hard thresholds. --- class: middle The first problem with this plan, of course, is that now we need more than one training set. We could split our original training set into smaller pieces, and fit separate models to each of those. However, decision trees perform better on relatively large data sets, meaning we want to be careful about breaking our training data into too many pieces. Instead, if we want to fit decision trees to many training sets, it's usually better to resample our data to create those sets. --- class: middle Usually, we'll resample our training data to create new training sets of the same size (the same number of observations/rows) as the original training data. When we do this _with replacement_ (so that each observation can be sampled more than once), we call it **bootstrapping**, and each subsample a **bootstrap sample**. <br />  (Image from [Hands-On Machine Learning with R](https://bradleyboehmke.github.io/HOML/process.html#bootstrapping)) --- class: middle Let's go ahead and make some bootstrap samples for our Ames housing data. First off, let's make the Ames data frame and split it into training and testing sets: ```r set.seed(123) ames <- AmesHousing::make_ames() row_idx <- sample(seq_len(nrow(ames)), nrow(ames)) training <- ames[row_idx < nrow(ames) * 0.8, ] testing <- ames[row_idx >= nrow(ames) * 0.8, ] ``` We can then use the `sample` function to create a bootstrap sample from our training data like so: ```r single_bootstrap <- training[sample(1:nrow(training), size = nrow(training), replace = TRUE), ] ``` --- class: middle But we don't want _one_ bootstrap sample, we want _many_. Let's go ahead and use `lapply` to run our bootstrapping code 100 times (chosen because it's a nice round number). We'll store the output as a list named `ames_bootstraps`: ```r ames_bootstraps <- lapply( 1:100, \(x) training[sample(1:nrow(training), size = nrow(training), replace = TRUE), ] ) ``` Note that `lapply` is running our code once for each value from 1 to 100, but we aren't using that value anywhere -- our resampling code takes no input arguments. --- class: middle So now we have our 100 separate bootstraps of our training data. We can use `lapply` again to fit a separate decision tree to each of those samples: ```r library(rpart) ames_trees <- lapply( ames_bootstraps, \(x) rpart(Sale_Price ~ ., x) ) ``` <br /> This time around we're actually using that "x" as an input value to pass different training data to each function. --- And just like that, we have 100 decision trees! If we wanted, we could use `rpart.plot` to visualize each of our trees. Doing so, we'd quickly notice that each tree uses different variables, has a different number of leaf nodes, and otherwise produces different predictions -- all because they're each fit to a different data set. ```r rpart.plot::rpart.plot(ames_trees[[1]]) ``` <img src="week_6_imgs/first_tree.jpeg" width="1783" /> --- class: middle We now have our 100 decision trees, and need to use them to make predictions. We can use a short loop to create 100 new columns in our testing data frame, each with the predictions from a different tree: ```r tree_testing <- testing for (i in seq_along(ames_trees)) { tree_testing[[paste0("tree_", i)]] <- predict( ames_trees[[i]], testing ) } ``` <br /> But at the end of the day, 100 different predictions isn't super useful to us. We need to combine these into a single prediction if we're going to use this model to make decisions or inform our actions. A surprisingly effective way to aggregate our 100 predictions into a final value is to simply take the mean of the individual tree predictions, which we can do with `rowMeans`: ```r library(dplyr) ``` ``` ## ## Attaching package: 'dplyr' ``` ``` ## The following objects are masked from 'package:stats': ## ## filter, lag ``` ``` ## The following objects are masked from 'package:base': ## ## intersect, setdiff, setequal, union ``` ```r tree_testing <- tree_testing |> mutate(tree_aggregate = rowMeans(across(starts_with("tree_")))) ``` --- class: middle Let's go ahead and boil those predictions down into RMSEs. First, we'll use the `summarize` function to calculate the RMSE of each decision tree's predictions (the columns that "start with" `tree_`): ```r tree_rmse <- tree_testing |> summarize( across( starts_with("tree_"), ~ sqrt(mean((.x - tree_testing$Sale_Price)^2)) ) ) ``` <br /> Then we'll use the `pivot_longer` function to take our super-wide table and make it "long", so our RMSE values are stored in a single column. We'll also sort the table by RMSE so that our most-successful models are on top: ```r library(tidyr) tree_rmse <- tree_rmse |> pivot_longer(everything(), values_to = "RMSE") |> arrange(RMSE) ``` (If you haven't seen `pivot_longer` before, I recommend section 12.3 of [R for Data Science](https://r4ds.had.co.nz/tidy-data.html#pivoting)). --- class: middle And finally, let's look at the first few rows of RMSE: ```r head(tree_rmse) ``` ``` ## # A tibble: 6 × 2 ## name RMSE ## <chr> <dbl> ## 1 tree_aggregate 35252. ## 2 tree_10 36457. ## 3 tree_29 36886. ## 4 tree_81 36955. ## 5 tree_70 37305. ## 6 tree_84 37430. ``` <br /> Our aggregated model (the mean of all other tree predictions) is dramatically better than the component trees, with an RMSE that's over $1,000 lower. --- class: middle This method that we just walked through is called **bootstrap aggregation**, or **bagging** for short. It can be done with any model, not just decision trees, and works just as well for classification as regression (though classifiers often aggregate using the _most common_ predicted class instead of the mean predicted probability). At its core, bagging improves predictions by reducing the variance of the model, just as we discussed last week. Look for instance at this graph of the predictions of our bagged model (red points) compared to the component models (blue): <br /> <!-- --> Our aggregated model has much less "spread" in its predictions than the decision trees, with similar sale prices getting generally similar predictions. --- class: middle It's worth comparing the results from our bagged model to what we'd get from just a single decision tree: ```r single_tree <- rpart(Sale_Price ~ ., training) sqrt(mean((predict(single_tree, training) - training$Sale_Price)^2)) ``` ``` ## [1] 38055.45 ``` ```r head(tree_rmse, 1) ``` ``` ## # A tibble: 1 × 2 ## name RMSE ## <chr> <dbl> ## 1 tree_aggregate 35252. ``` So our aggregated model is a pretty large improvement over the single decision tree approach. --- class: middle And it's worth noting that this improvement isn't just because our component trees happened to be better fits. <br /> In fact, a lot of the individual trees are actually _worse_ predictors than our non-aggregated tree: ```r tail(tree_rmse) ``` ``` ## # A tibble: 6 × 2 ## name RMSE ## <chr> <dbl> ## 1 tree_9 43322. ## 2 tree_74 43334. ## 3 tree_93 43631. ## 4 tree_1 43917. ## 5 tree_56 46506. ## 6 tree_25 47496. ``` <br /> We call these individual decision trees, which aren't much better than randomly guessing sale prices, **weak learners**. --- class: middle The theory here is similar to the idea that if you have a bunch of people guess how many jelly beans are in a jar, the average of everyone's guesses is probably going to be a lot closer than any individual guess. <br /> In this sort of situation, having higher variance in your guesses is helpful to improve predictions. <br /> You don't necessarily need any given person person to be an incredible guesser, but you're hoping that someone else in the crowd is guessing equally badly in the opposite direction so that all the predictions average out to the "truth". <br /> You can improve your predictive accuracy just by combining weak learners. --- Which begs the question: can we add even _more_ variance to our component trees? Right now we have 100 decision trees, each fit using different training data and the same predictors. What if we started using different _predictors_ for each, as well? What if, for instance, we fit each tree on a different random 40% of predictors? Let's try it and find out! First, we'll get a vector of all our predictors: ```r ames_names <- setdiff(names(ames), "Sale_Price") ``` And then we can fit our trees using almost exactly the same code as we used for bagging. The only difference is that we'll subset our training data to use a random 40% of variables each time: ```r random_variable_trees <- lapply( ames_bootstraps, \(x) { # Select a random 40% of variables (and Sale_Price) x <- x[ c(sample(ames_names, size = length(ames_names) * 0.4), "Sale_Price") ] rpart(Sale_Price ~ ., x) } ) ``` (40%, just like 100 trees earlier, is just a round number I chose at random.) --- We can use almost exactly the same code as earlier to find our RMSE: ```r tree_testing <- testing for (i in seq_along(random_variable_trees)) { tree_testing[[paste0("tree_", i)]] <- predict( random_variable_trees[[i]], testing ) } tree_testing <- tree_testing |> mutate(tree_aggregate = rowMeans(across(starts_with("tree_")))) tree_rmse <- tree_testing |> summarize( across( starts_with("tree_"), ~ sqrt(mean((.x - tree_testing$Sale_Price)^2)) ) ) tree_rmse <- tree_rmse |> pivot_longer(everything(), values_to = "RMSE") |> arrange(RMSE) ``` --- class: middle ```r head(tree_rmse, 3) ``` ``` ## # A tibble: 3 × 2 ## name RMSE ## <chr> <dbl> ## 1 tree_aggregate 30999. ## 2 tree_67 36065. ## 3 tree_41 37721. ``` <br /> So we've _dramatically_ improved on our bagged model, just by fitting each tree to a different random subset of our predictors. Increasing the variance of our trees results in better aggregated predictions, even as the individual trees become worse. --- class: middle But can we go further? What if instead of fitting each _tree_ to a different set of variables, we chose a random group of variables for each _split_? This way each tree could still potentially include every variable, but we'd force the trees to vary by restricting their choices when splitting. This technique is what we call the **random forest** algorithm. Originally introduced in a seminal 2001 paper by Leo Breiman ([link](https://link.springer.com/article/10.1023/A:1010933404324)), the random forest is one of the first true machine learning methods and is still in common use today. Rather than find a way to select random variables at each split, we're going to use the `ranger` package to make random forests in this course. Go ahead and install it now, then load it: ```r install.packages("ranger") library(ranger) ``` --- class: middle We can then use the `ranger` function to fit our random forests, using the same syntax as with `lm`: ```r ames_rf <- ranger(Sale_Price ~ ., training) ``` That `ranger` call will create 500 decision trees, fit to bootstrap samples, using random variable subsets for each split, incredibly quickly. We can then use `predict` to get our predictions for the test set -- but note that we need to wrap this function in the `predictions` function from `ranger` to get actual numeric predictions: ```r rf_predictions <- predictions(predict(ames_rf, testing)) ``` We can then use those to calculate RMSE: ```r sqrt(mean((rf_predictions - tree_testing$Sale_Price)^2)) ``` ``` ## [1] 23143.89 ``` Immediately, out-of-the-box, the random forest _dramatically_ out-performs our best bagged tree model, to say nothing of our simple decision trees and linear regressions we've used in the past. --- But just like with decision trees, random forests have **hyperparameters** -- values which can be very important in influencing our predictions but can't be automatically determined when fitting your model to the training data. For instance, we can use the `min.node.size` hyperparameter to control how deep we want each of our trees to grow. Just like the `minbucket` argument to `rpart`, this value sets how many observations each leaf node needs to have, controlling how deep our trees can get. For instance, we might notice an improvement in accuracy if we set our min node size to 1, letting each decision tree grow as deep as needed to classify every observation: ```r min_node <- ranger(Sale_Price ~ ., training, min.node.size = 1) rf_predictions <- predictions(predict(min_node, testing)) sqrt(mean((rf_predictions - tree_testing$Sale_Price)^2)) ``` ``` ## [1] 22735.54 ``` --- There are a few other hyperparameters which can have sizable impacts on our random forests, namely: + `mtry`: How many variables should be considered at each split? + `num.trees`: How many decision trees should be aggregated? + `sample.fraction`: What percent of observations should be sampled for each tree? If set to 1 and `replace` is set to TRUE, the random forest will use bagged trees like we walked through earlier, but it's often a good idea to mess with the sampling. + `replace`: Sample observations with replacement? Choosing smart values for these hyperparameters can give us pretty notable improvements over the default model: ```r new_params <- ranger(Sale_Price ~ ., training, num.trees = 800, mtry = 20, min.node.size = 1, replace = FALSE, sample.fraction = 1) rf_predictions <- predictions(predict(new_params, testing)) sqrt(mean((rf_predictions - tree_testing$Sale_Price)^2)) ``` ``` ## [1] 21467.52 ``` --- So... how do we pick smart values for hyperparameters? Short answer: trial and error. For the long answer: We'll pick that up next week. --- One last note for this week. While we've focused entirely on regression today, all of the techniques we've gone over can be easily applied to classification problems as well. To aggregate our trees, we can either average our class probabilities across each tree, or just take the most common class predicted by the individual trees. As a quick example, let's load in our `attrition` data and make train/test splits: ```r library(modeldata) data(attrition) row_idx <- sample(seq_len(nrow(attrition)), nrow(attrition)) training <- attrition[row_idx < nrow(attrition) * 0.8, ] testing <- attrition[row_idx >= nrow(attrition) * 0.8, ] ``` To fit a random forest classifier, we can use exactly the same syntax as with regression: ```r rf_classifier <- ranger(Attrition ~ ., data = training) ``` Everything we've talked about with classification in the past -- sensitivity and specificity, dealing with imbalanced classes, and so on -- still applies when dealing with random forests. I've included the confusion matrix for this classifier on the next page, so we can see fit statistics for this model. Note in particular how non-sensitive our model is -- it does a _really_ bad job at predicting people who _do_ quit. We'd need to either weight our classes, balance our data, or set a different threshold for this model. --- ```r caret::confusionMatrix( predictions(predict(rf_classifier, testing)), testing$Attrition, positive = "Yes") ``` ``` ## Confusion Matrix and Statistics ## ## Reference ## Prediction No Yes ## No 247 37 ## Yes 1 10 ## ## Accuracy : 0.8712 ## 95% CI : (0.8275, 0.9072) ## No Information Rate : 0.8407 ## P-Value [Acc > NIR] : 0.08546 ## ## Kappa : 0.3027 ## ## Mcnemar's Test P-Value : 1.365e-08 ## ## Sensitivity : 0.21277 ## Specificity : 0.99597 ## Pos Pred Value : 0.90909 ## Neg Pred Value : 0.86972 ## Prevalence : 0.15932 ## Detection Rate : 0.03390 ## Detection Prevalence : 0.03729 ## Balanced Accuracy : 0.60437 ## ## 'Positive' Class : Yes ## ``` --- class: middle title # References --- As usual, titles are links: + [Hands-on Machine Learning](https://bradleyboehmke.github.io/HOML/bagging.html) provides a lot of the content for this week; specifically chapter 10 (bagging), 11 (random forests), and section 2.4 (the bootstrap) + [CASI](https://web.stanford.edu/~hastie/CASI_files/PDF/casi.pdf) section 10.2 (bootstrapping) and 17.1 (random forests) This week also uses a few seminal papers which are worth knowing about: + [Bootstrap Methods for Standard Errors, Confidence Intervals, and Other Measures of Statistical Accuracy](https://projecteuclid.org/journals/statistical-science/volume-1/issue-1/Bootstrap-Methods-for-Standard-Errors-Confidence-Intervals-and-Other-Measures/10.1214/ss/1177013815.full) covers the essentials of the bootstrap + [Random decision forests](https://ieeexplore.ieee.org/document/598994) introduced random variable selection for decision trees. Note that this is _not_ random forests as we think of them today, but rather the "use random features per-tree" method we walked through. + [Random Forests](https://link.springer.com/article/10.1023/A:1010933404324), the paper that introduced the random forest as it's used today (this one is worth reading) Also, I should mention that normally you'd use the [`boot` package](https://cran.r-project.org/package=boot) to take bootstrap samples, rather than writing this yourself via lapply. The manual method made more sense for the demonstration today, but is less efficient than running through `boot`.